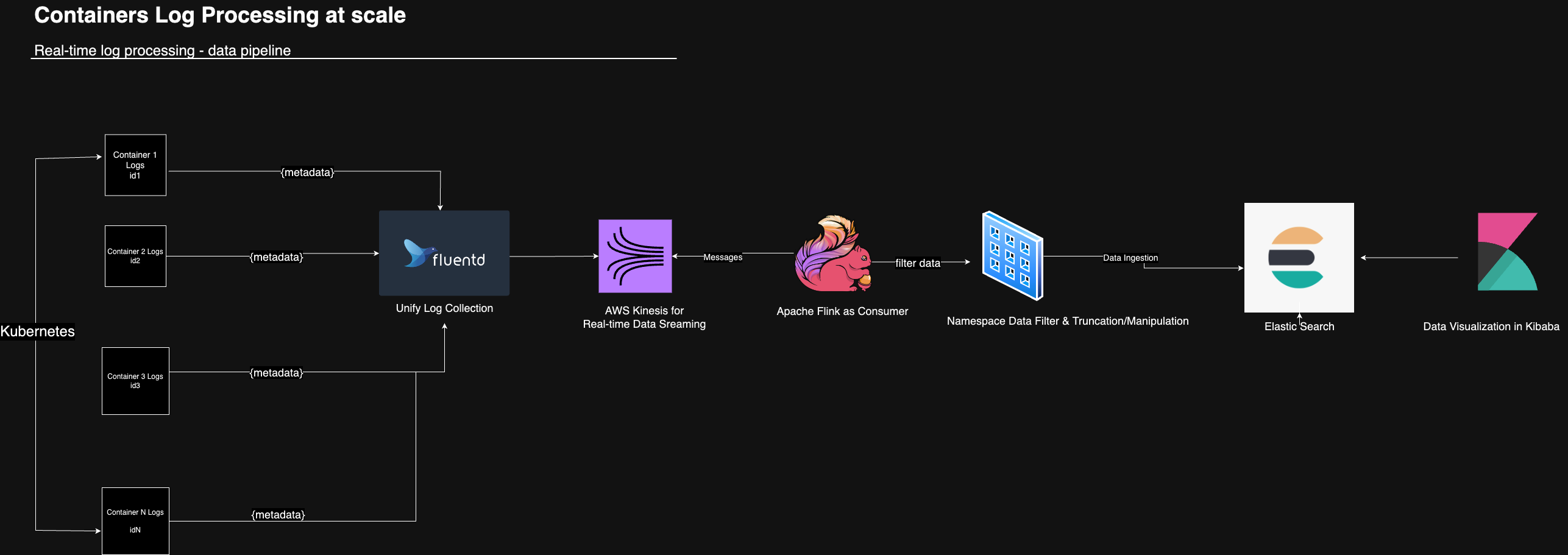

Containers Log Processing Pipeline is a real-time log processing system that lets internal developers run and debug their plugins. It collects log data from multiple containers, processes it in real time, and stores it in a scalable data store so the logs can be searched and inspected later when debugging. The system uses Fluentd for log collection, AWS Kinesis for data ingestion, Apache Flink for stream processing, and Elasticsearch (as part of the EFK stack) for data storage and visualization.

Technologies Used:

- Fluentd: Log Collection

- AWS Kinesis: Data Ingestion

- Apache Flink (Java): Data Stream Processing

- Elasticsearch (part of the EFK stack): Data Storage and Visualization

- Other tools: Git, Drone for Continuous Integration, Kubernetes for container orchestration.

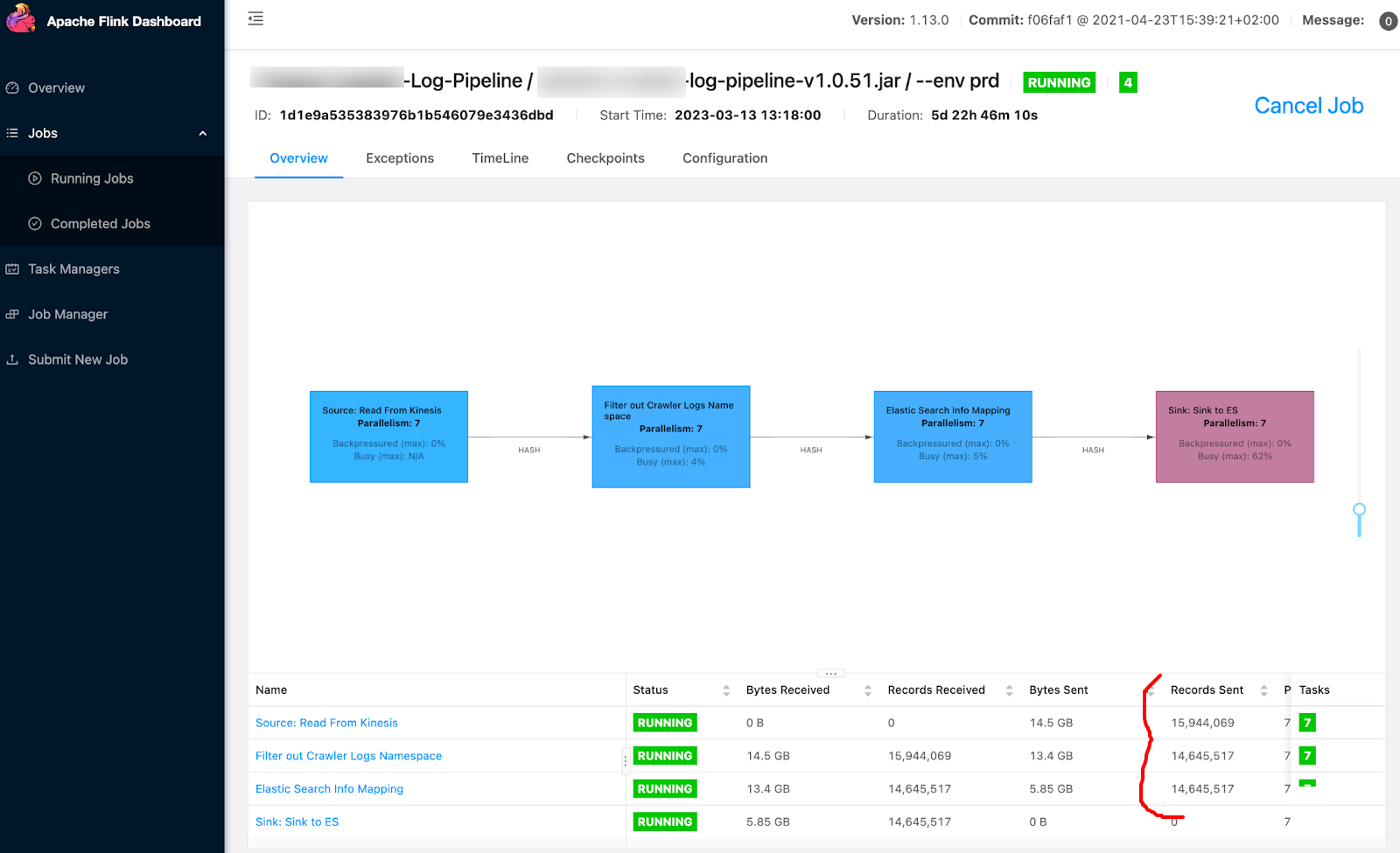

Flink Architecture:

As the figure above illustrates, the Flink Bolt reads data from Kinesis in real time, truncates it, and stores it in Elasticsearch, a highly scalable open-source search and analytics engine. Truncation reduces the size of each log entry and therefore the storage space it occupies in Elasticsearch.
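The exact truncation rule is not documented here. The following is a minimal sketch, assuming the rule caps each log message at a fixed number of characters and appends a marker so truncated entries are recognizable in Kibana. The class name `LogTruncator`, the 10,000-character default, and the `...[truncated]` marker are hypothetical.

```java
/**
 * Hypothetical helper that caps a log message's length before it is indexed.
 * MAX_MESSAGE_LENGTH and MARKER are illustrative assumptions, not the pipeline's real values.
 */
final class LogTruncator {
    // Assumed default cap, in UTF-16 chars, for a single log message.
    static final int MAX_MESSAGE_LENGTH = 10_000;
    // Assumed suffix that makes truncated entries easy to spot in Kibana.
    static final String MARKER = "...[truncated]";

    private LogTruncator() {}

    /** Returns the message unchanged if it fits; otherwise a copy of at most maxLength chars ending in MARKER. */
    static String truncate(String message, int maxLength) {
        if (maxLength < MARKER.length()) {
            throw new IllegalArgumentException("maxLength must be at least " + MARKER.length());
        }
        if (message == null || message.length() <= maxLength) {
            return message;
        }
        // Reserve room for the marker so the result never exceeds maxLength.
        int keep = maxLength - MARKER.length();
        // Do not split a surrogate pair (e.g. an emoji) across the cut.
        if (keep > 0 && Character.isHighSurrogate(message.charAt(keep - 1))) {
            keep--;
        }
        return message.substring(0, keep) + MARKER;
    }

    /** Convenience overload using the assumed default limit. */
    static String truncate(String message) {
        return truncate(message, MAX_MESSAGE_LENGTH);
    }
}
```

In a Flink job, a call like this would typically sit inside a `map` operator (for example, a `MapFunction<String, String>`) between the Kinesis source and the Elasticsearch sink. Keeping the marker inside the length budget means the stored size stays bounded by the chosen limit.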

Here's how the process works:

- The Flink Bolt reads data from Kinesis in real-time.

- The data is then processed, which involves applying the truncation to reduce its size.

- Once the data is truncated and mapped to named keys, it is sent to Elasticsearch for storage and indexing. Elasticsearch distributes the data across its nodes and provides fast, efficient search over it.

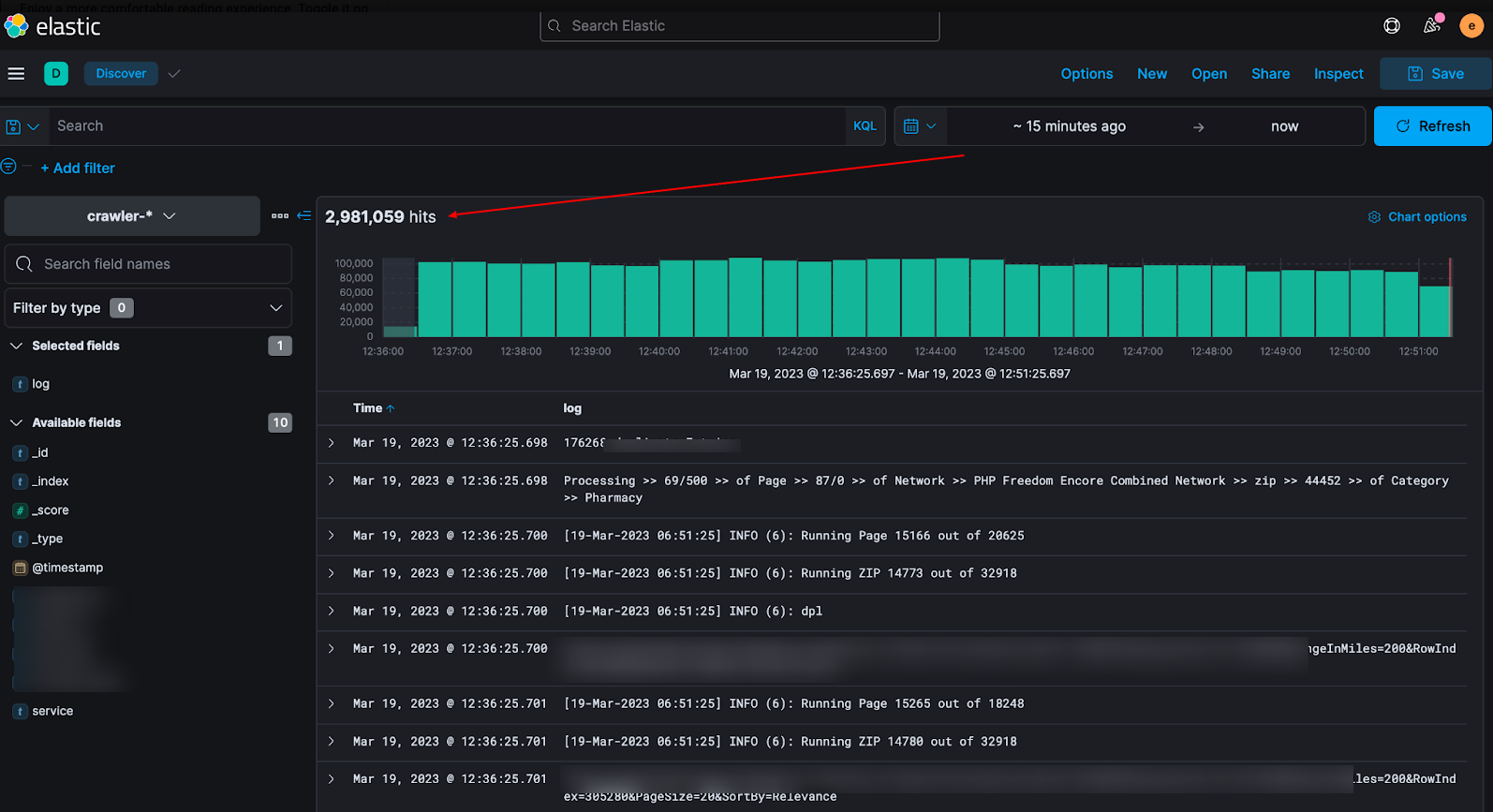

- Finally, the stored logs can be visualized in Kibana, as shown in the figure above.
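The mapping and indexing steps above can be sketched in plain Java. This is not the pipeline's actual Flink code: it assumes each Kinesis record is a tab-separated line of timestamp, container name, and (already truncated) message, a hypothetical format, maps it to named keys, and serializes a batch as an Elasticsearch `_bulk` request body (newline-delimited JSON: one action line followed by one document line). The class `BulkBodyBuilder`, the field names, and the index name `container-logs` are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch: map raw log lines to keyed documents and build an
 * Elasticsearch _bulk body. Record format, field names, and index are assumptions.
 */
final class BulkBodyBuilder {
    // Assumed target index; the real pipeline's index naming is not documented.
    static final String INDEX = "container-logs";

    private BulkBodyBuilder() {}

    /** Maps an assumed "timestamp<TAB>container<TAB>message" line to named keys. */
    static Map<String, String> toDocument(String rawLine) {
        String[] parts = rawLine.split("\t", 3); // limit 3 keeps tabs inside the message
        if (parts.length < 3) {
            throw new IllegalArgumentException("expected 3 tab-separated fields: " + rawLine);
        }
        Map<String, String> doc = new LinkedHashMap<>(); // preserves key order in the JSON
        doc.put("@timestamp", parts[0]);
        doc.put("container", parts[1]);
        doc.put("message", parts[2]);
        return doc;
    }

    /** Serializes documents as action/document line pairs, each terminated by '\n'. */
    static String toBulkBody(List<Map<String, String>> docs) {
        StringBuilder sb = new StringBuilder();
        for (Map<String, String> doc : docs) {
            sb.append("{\"index\":{\"_index\":\"").append(INDEX).append("\"}}\n");
            sb.append(toJson(doc)).append('\n');
        }
        return sb.toString();
    }

    /** Minimal JSON object writer for flat string-to-string maps. */
    private static String toJson(Map<String, String> doc) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : doc.entrySet()) {
            if (!first) sb.append(',');
            first = false;
            sb.append(quote(e.getKey())).append(':').append(quote(e.getValue()));
        }
        return sb.append('}').toString();
    }

    /** Quotes a string, escaping characters JSON does not allow unescaped. */
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }
}
```

The `_bulk` endpoint expects the body to end with a newline, which is why every line, including the last, is terminated with `\n`. In a production job, a JSON library and Flink's Elasticsearch connector would normally replace this hand-rolled serializer; the sketch only shows the shape of the data at each step.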

Overall, the Flink Bolt processes real-time log streams from Kinesis and stores them in Elasticsearch. This lets developers search and inspect their plugins' logs shortly after they are emitted, while truncation keeps Elasticsearch storage use under control.