In my recent Data Engineering project, I leveraged Microsoft Fabric for data standardization, a crucial task that involved processing millions of unstructured records and transforming them into standardized, actionable datasets. The data was stored in OneLake, where I applied various data engineering techniques to ensure consistency and quality before distributing the results to multiple destinations, such as SupabaseDB for storage and Power BI for analytics and visualization.

Key Steps in the Process

-

Data Collection and Standardization:

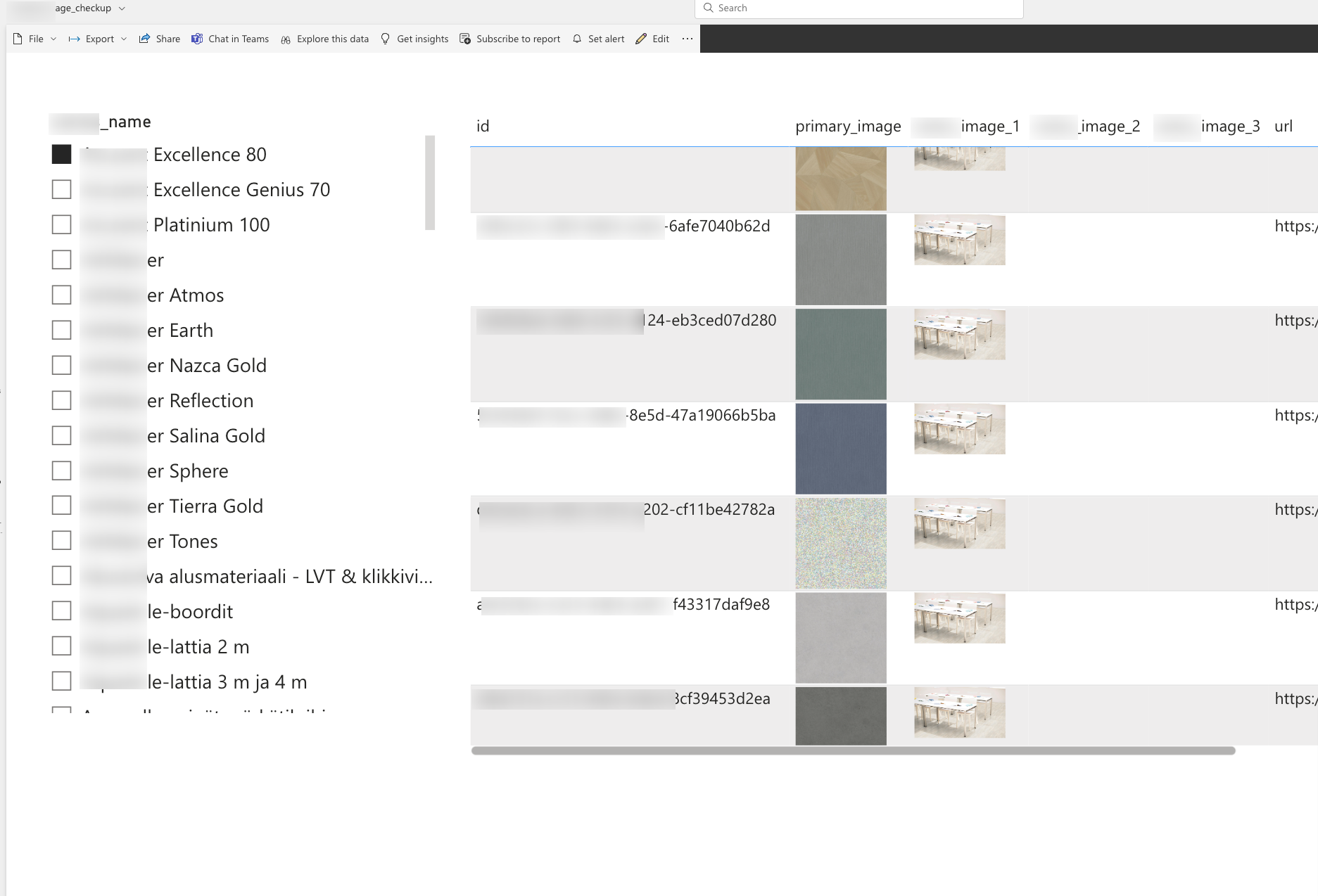

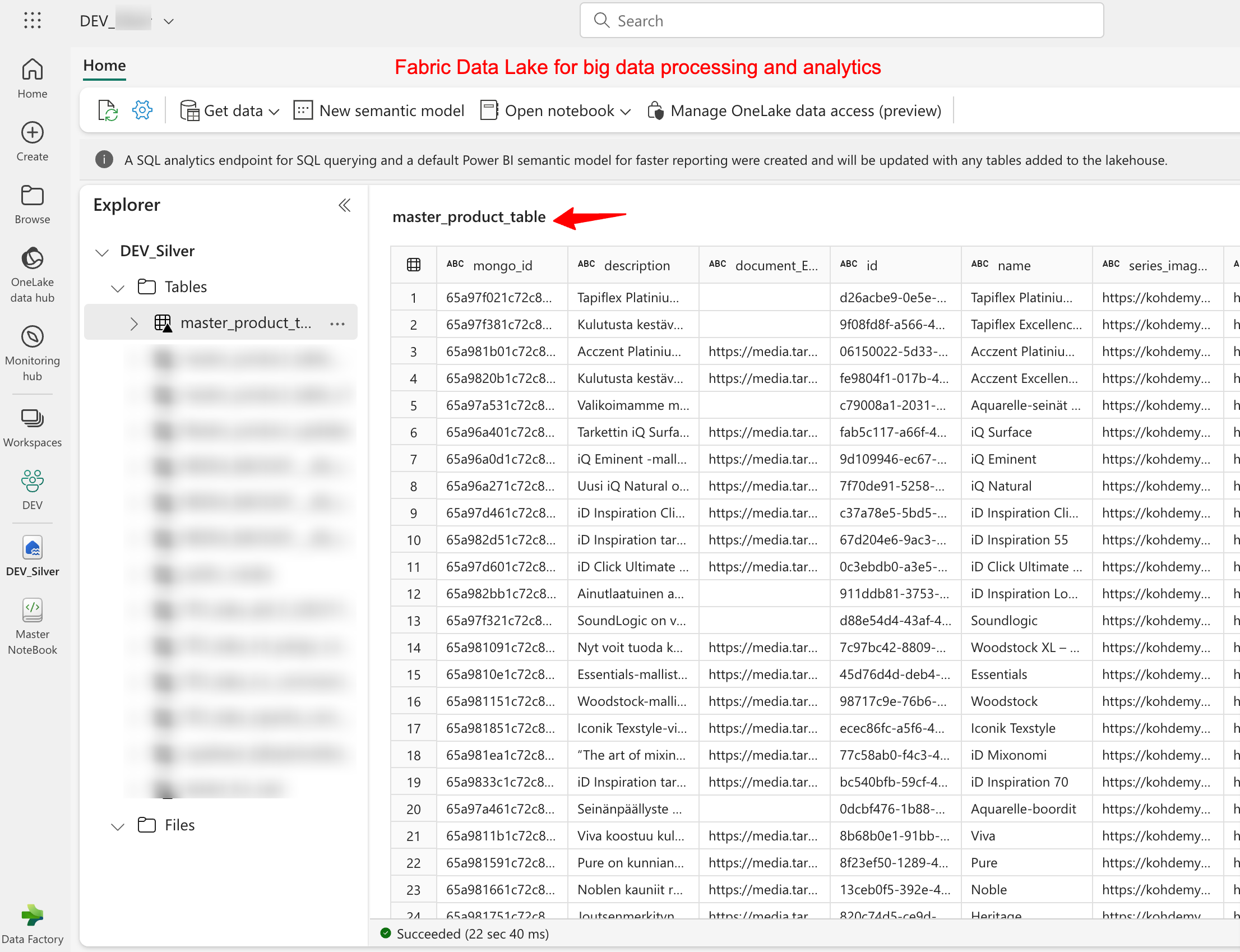

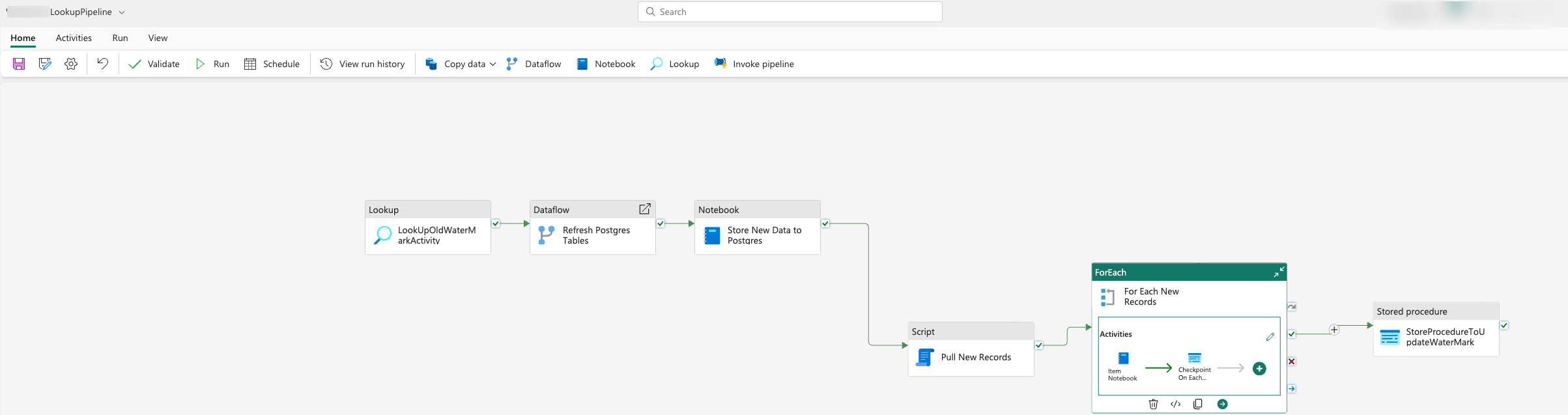

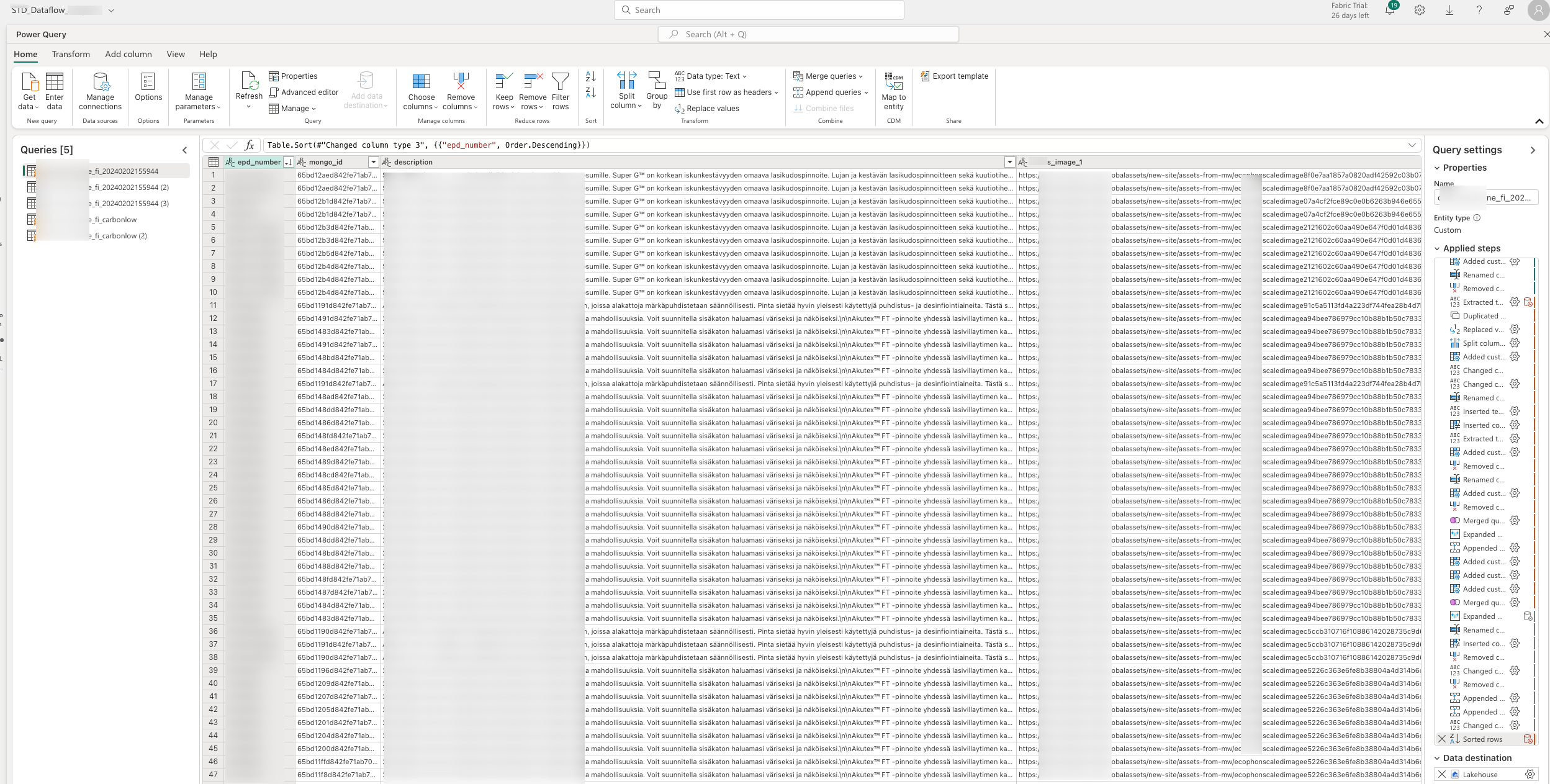

First collected vast amounts of raw, unstructured data from various sources. To standardize each dataset, I created unique dataflows in Microsoft Fabric, named "STD_DataFlow_manufacturer_name". These dataflows were responsible for validating the datasets, ensuring all required columns were present, and filling in missing values where necessary.

-

Handling Outliers and Missing Columns:

During standardization, outliers were identified and removed to maintain data quality. The dataflow automatically added any missing columns, ensuring that the datasets aligned with the expected schema. Where data was incomplete, I implemented logic to infer missing values or flag them for further review.

-

Creating and Merging Series Identifiers:

One of the more complex parts of the process involved generating a "parent_id" for each product, which represents the product's series. To achieve this, I duplicated the entire dataset and extracted unique rows based on the series information. Outliers were removed, and I assigned unique identifiers to the newly created series rows. These were then merged back into the original dataset using "series_name" as the key, updating the master table with this enriched information. -

Publishing and Post-Processing:

Once the data was standardized, filtered, and joined, selected only the required columns and ensured the dataset met quality standards. The final dataset was published to the "product_table" in the lakehouse, where it could be used for further analysis and reporting. These standardized datasets were then pushed to destinations like SupabaseDB for storage and Power BI for visualization and quality assurance.